BRAINS IN BRIEFS

Scroll down to see new briefs about recent scientific publications by neuroscience graduate students at the University of Pennsylvania. Or search for your interests by key terms below (e.g., sleep, Alzheimer’s, autism).

A new discovery for how DNA unwinds in neurons to support brain health and memory

or technically,

Histone variant H2BE enhances chromatin accessibility in neurons to promote synaptic gene expression and long-term memory

[See original abstract on Pubmed]

Emily Feierman was the lead author on this study. Emily is currently a postdoc in the labs of Kiran Musunuru and Becca Ahrens-Nicklas at Penn/CHOP developing gene editing therapies for rare pediatric metabolic disorders.

Authors of the study: Emily R Feierman, Sean Louzon, Nicholas A Prescott, Tracy Biaco, Qingzeng Gao, Qi Qiu, Kyuhyun Choi, Katherine C Palozola, Anna J Voss, Shreya D Mehta, Camille N Quaye, Katherine T Lynch, Marc V Fucillo, Hao Wu, Yael David, Erica Korb

While most of us know about the famous double-stranded structure of DNA, what is much less common knowledge is how DNA is stored inside of our cells. Think about it… a single cell in your body has so much DNA that if you laid it out, it would be almost 2 meters (or 6 and a half feet) long! In a flash back to biology class, you may remember that DNA is stored in 23 pairs of chromosomes in each cell which look like big fuzzy ‘X’s. And as it turns out, each of those chromosomes is really made up of DNA wrapped around lots of big blocky proteins called histones, almost like string wound around a bunch of yo-yos and stacked next to each other.

When a cell wants to read a gene from the DNA to make a new protein, it uses different mechanisms to partially unwind those histone yo-yos. This type of control (opening and closing access to DNA without changing the DNA itself) is called epigenetics. It helps cells decide which genes to use and when. This is especially important in the brain, where neurons need to turn on certain genes for learning, communication, and memory. Epigenetics is a very hot field of research because when this regulation goes wrong, cells may activate the wrong genes or fail to activate the right ones, which can contribute to neurological disease.

Emily Feierman, a recent NGG graduate, and her lab wanted to know how epigenetics plays a role in the brain – specifically how different histones can modify how tightly packed the DNA is in neurons across the brain and whether or not this can affect thinking and memory. To do this, she looked at a newly discovered component of the ‘yo-yos’, a histone protein called H2BE.

Before we talk more about H2BE we have to know a bit more about histones. Each ‘yo-yo’ is made up of four different histones which are usually standard. These are called H2A, H2B, H3, and H4. But it turns out, your cells can actually substitute in some less common histones to loosen up the DNA and allow different genes to be expressed. Each of the 4 standard histones can come in those less common flavors. While previous studies had found these flavor variants in the brain for H2A and H3, nobody had yet found any widely present flavors of the standard H2B histone building block.

Emily and her team were interested in the ‘E’ variant of H2B specifically because, over a decade ago, H2BE had been shown to be highly present in neurons of the olfactory cortex of the brain, the area responsible for your ability to smell. At the time, however, it wasn’t clear whether it was present in the rest of the brain or what exactly it was doing.

To remedy this, Emily developed a new way to mark the presence of H2BE in neurons and found that instead of existing only in the olfactory area, it can actually be found in neurons all across the cortex of the brain. This suggests that it plays an important and more general role in supporting neurons, since it’s found across the brain instead of in just one area. She also found that H2BE loosens up the DNA strands more than normal H2B, so that genes can be more easily read out for protein production. But what proteins does H2BE help free up for production, you may ask?

To answer this question, Emily used a mouse model gene knockout (KO) of H2BE, meaning the mice could not produce H2BE, and harvested neurons from their brains and compared the range of genes turned on in these mice relative to normal mice. She found that neurons from H2BE KO mice had changes in genes related to the functioning of neuronal synapses, the sites of contact between neurons that allow them to communicate.

One thing to know about synapses is that they are complicated button-like structures on the branches of neurons that require a lot of maintenance. Like little machines, they require scaffolding to build and molecular gizmos to make work, which requires a lot of different proteins to be made and therefore genes to be active. So any changes in synaptic gene expression in the H2BE KO mice might lead to impaired synaptic functions. When Emily tested this, this is exactly what she found - neurons from H2BE KO mice had reduced synaptic responses to electrical inputs.

It is also well known that the ‘strength’ of synapses and their density in neurons can play a role in memory formation. To test if H2BE plays a role in memory, Emily subjected the H2BE KO mice, which have deficits in synaptic functioning, to different behavioral and memory tasks. While they didn’t seem to have any problems with their sense of smell, social activity, or anxiety, they did have reduced long-term memory!

So it seems that by epigenetically controlling the looseness of DNA at key sites related to proteins used for synaptic functioning, the histone H2BE variant plays an important role in maintaining neuronal health and memory formation. Emily’s work is the first to fully characterize the role of this histone variant in brain functioning, and it opens up new insights into how gene expression is controlled in neurons to ultimately contribute to behavior, and how it could go wrong in aging and disease.

About the brief writer: Joe Stucynski

Joe is a graduate student in Dr. Franz Weber’s and Dr. Shinjae Chung’s labs at Penn. He is interested in how the immune system influences sleep regulation during sickness via interoceptive pathways.

Want to learn more about H2BE and epigenetics? You can find Emily’s full paper here!

How psychedelics remap the brain to help overcome traumatic fear

or technically,

Psilocybin-enhanced fear extinction linked to bidirectional modulation of cortical ensembles

[See original abstract on Pubmed]

Sophie A. Rogers was the lead author on this study. Sophie is a sixth-year PhD candidate investigating the impact of psychedelics on cortical computations underlying persistent fear and pain in Dr. Gregory Corder's laboratory at the University of Pennsylvania. Sophie's thesis work has been published in Nature Neuroscience, accepted in Nature, and was awarded an F31 training grant from the National Institute of Neurological Disorders and Stroke. In 2020, Sophie graduated from the University of Chicago with an Honors B.S. in Neuroscience, after completing an undergraduate thesis in the laboratory of Dr. Ming Xu. In 2026, she will join the laboratory of Dr. Maria Geffen at UPenn as a postdoctoral fellow studying the effect of psilocybin on auditory predictive processing in mouse models of autism spectrum disorder. In the future, Sophie hopes to become an independent academic researcher using large-scale neural recordings and computational techniques to understand how psychedelics alter mood, motivation, and decision-making across disease models.

Authors of the study: Sophie A. Rogers, Elizabeth A. Heller & Gregory Corder

According to the World Health Organization, approximately 70% of people worldwide will experience a potentially traumatic event in their lifetime. Of these people, 5.6% will go on to develop post-traumatic stress disorder (PTSD), which is a condition where individuals have persistent, frightening thoughts and memories of the traumatic event. Often, individuals with PTSD experience sleep problems, feel detached or numb, or may be easily startled. Psychedelic drugs are being increasingly researched as a tool to help people overcome mental health conditions like PTSD. One of the most commonly studied drugs, and the focus of today's post, is called psilocybin. Although no large-scale clinical study has investigated psilocybin as a treatment for PTSD, scientists have found that even a single dose of psilocybin can have a lasting impact on patients suffering from other neuropsychiatric disorders such as depression, substance use disorder, and anxiety. In order to explore the effects of psilocybin on trauma, Sophie Rogers, a former NGG graduate student in Greg Corder’s lab, carried out experiments in mice to identify how psilocybin affects the brain after a traumatic event.

To begin exploring the question of how psilocybin produces its therapeutic effects on the brain, Sophie first needed to choose a way to model a traumatic event in mice. She used a very common experimental approach called trace fear conditioning (TFC). Under this approach, mice undergo a 5-day long experiment (Figure 1). On the first day, called habituation, mice are placed in a box (Box A) and a sound is played. The mice explore the environment and become familiar and comfortable with the box and the sound that is played. On the second day, mice are placed in a new box (Box B) and now, 20 seconds after the sound is played (the same sound from day 1), the mice receive a ~2 second electric shock, which is considered a traumatic event for the mice. On this day of the experiment, called acquisition, the mice learn to associate the sound played and the box environment with a painful memory. On the third, fourth and fifth days, the mice are returned to the original box (Box A) and the same sound is again played but without any shocks delivered. These final three days are called extinction, where the mice slowly learn that the sound no longer predicts the shock.

Figure 1. Illustration of the experimental approach used to investigate the effect of psilocybin on traumatic fear memories. The graphs below each box represent the relative amount of freezing that mice, on average, exhibited on a given day of the protocol.

Throughout the experiment, scientists can measure how afraid of the sound the mice are by measuring the amount of time that the mice spend freezing, defined as the complete absence of movement except for breathing. In this experiment, freezing levels are very low or zero on day 1 (habituation), but after the shock is delivered on day 2 (acquisition), the mice start to freeze for as much as 50% of the time. On day 3, the mice show a very high freezing response to the sound despite being in Box A, rather than Box B, where the shock took place. This demonstrates that the fear memory of the mice is generalized to different contexts, which occurs similarly in PTSD patients. Throughout extinction (days 3-5), most mice show a decline in freezing behavior, suggesting that they are beginning to loosen the association between the sound and the shock. However, their overall freezing levels are still much higher than during the habituation day (before the shock was introduced at all), suggesting that the fear memory is still present.
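
For readers who like to see the arithmetic, here is a minimal sketch of how a "percent of time freezing" score could be computed from a frame-by-frame record of whether a mouse is moving. This is an illustration only, not the scoring pipeline used in the study: the function name `percent_freezing`, the `min_bout_frames` parameter, and the example data are all hypothetical.

```python
def percent_freezing(moving, min_bout_frames=1):
    """Return the percentage of frames spent freezing.

    moving: list of booleans, one per video frame (True = mouse is moving).
    min_bout_frames: hypothetical threshold; a run of still frames only
        counts as freezing if it lasts at least this many frames.
    """
    if not moving:
        return 0.0

    frozen_frames = 0
    current_run = 0  # length of the current run of still frames
    for frame_is_moving in moving:
        if not frame_is_moving:
            current_run += 1
        else:
            if current_run >= min_bout_frames:
                frozen_frames += current_run
            current_run = 0
    # Count a still run that lasts until the end of the recording.
    if current_run >= min_bout_frames:
        frozen_frames += current_run

    return 100.0 * frozen_frames / len(moving)


# Made-up example: 10 frames with still runs of length 2, 1, and 3.
example = [True, False, False, True, False, True, True, False, False, False]
print(percent_freezing(example))                     # every still frame counts
print(percent_freezing(example, min_bout_frames=2))  # ignore 1-frame pauses
```

Requiring a minimum bout length is one common way to avoid counting brief pauses as freezing; the right threshold depends on the frame rate and the lab's scoring conventions.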

Sophie next wanted to test whether giving mice psilocybin directly after acquisition (day 2) affected how they responded during extinction (days 3-5). If psilocybin helps in overcoming the traumatic memory, we would expect that psilocybin-treated mice would show even lower freezing on the extinction days than those that were not given psilocybin (control). Interestingly, Sophie found that some mice benefited greatly from the psilocybin, showing a large reduction in freezing compared to the control mice, but not all the mice that received psilocybin after acquisition responded this way. This suggests that there is significant individual variability in how psilocybin affects the response to traumatic events. Nevertheless, Sophie wanted to take a closer look at the brains of the mice to determine if something was different between the group of mice that benefited from psilocybin, the group that did not, and the control group.

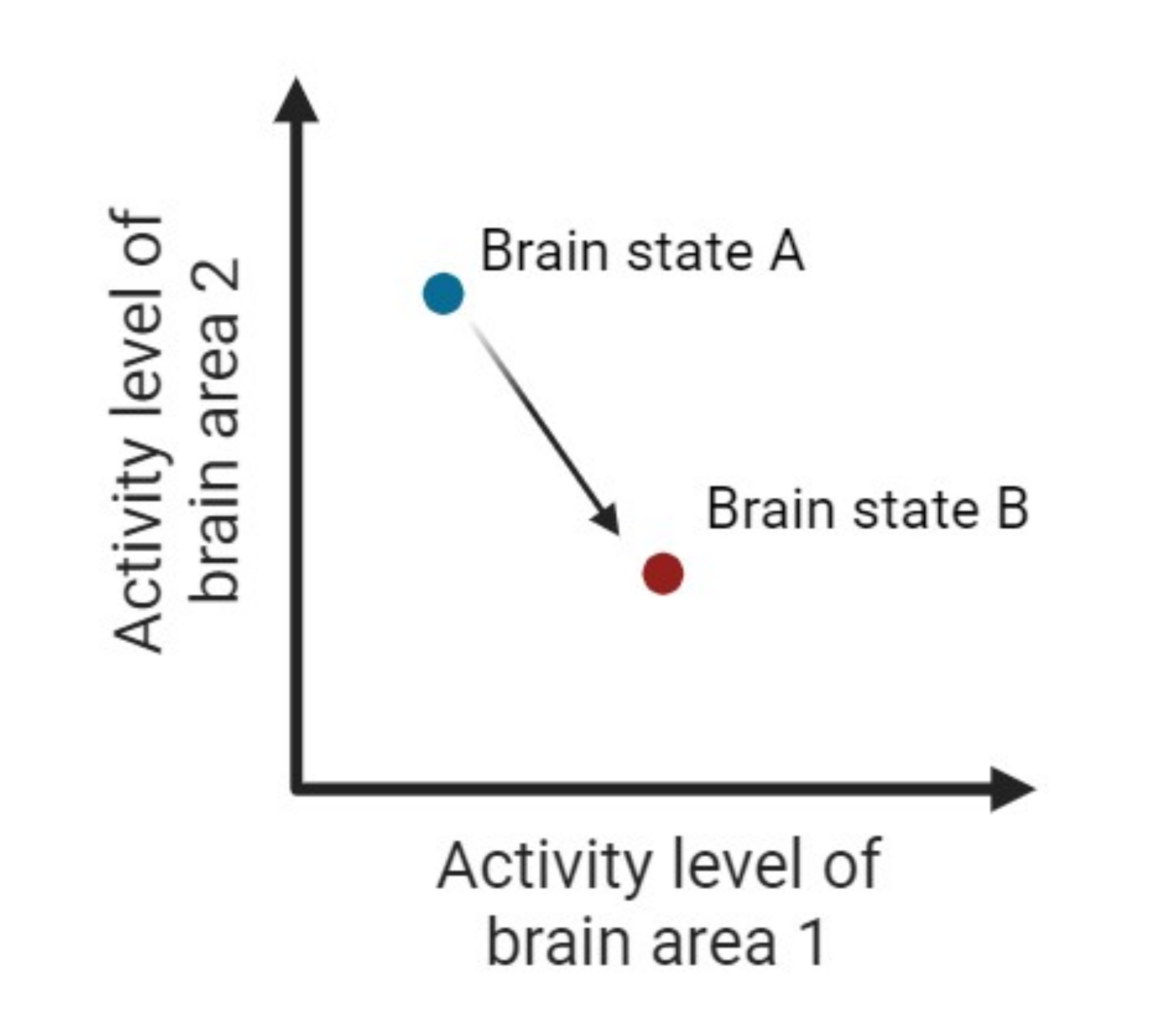

In order to determine how the psilocybin affected the brains of these mice after trace fear conditioning (TFC), Sophie measured the “activity” of single brain cells, or neurons, in an area called the retrosplenial cortex (RSC). Some neurons become active in response to things in our environment that they represent, for example a “sound neuron” might be activated by a sound being played. Other neurons become active in response to more internal things, like fear and memories. Sophie chose to focus on RSC because the neurons in the area are involved in storing and retrieving memories of lived experiences and because these neurons are involved in extinguishing fear memories. Sophie measured the activity of individual neurons in this brain area across the 5 days of trace fear conditioning (TFC). She found that in control mice, there was a group of neurons in the RSC that were strongly activated after the acquisition of the fear memory (day 2). During extinction (days 3-5) and while the control mice were freezing, this group of ‘fear neurons’ was highly active and did not vary much. This suggests that the fear neurons in RSC were inflexible while the mice remained focused on the strong association formed between the sound and the shock, even in the absence of the shock. When Sophie looked at the RSC neurons of mice that were given psilocybin and showed reduced freezing, she found that the group of fear neurons were less active during freezing and instead became more active in response to other things, like walking around. In other words, the neurons in the psilocybin mice were more flexible and able to dissociate from the original fear response. So Sophie found that psilocybin helps overcome traumatic experiences like TFC by allowing the brain to be more flexible, which enables therapeutic-like responses.

Taken together, Sophie’s results offer strong motivation for future studies of psilocybin on the treatment of neuropsychiatric disorders, like PTSD. Her work in mice shows us that psilocybin has a powerful effect on the brain’s ability to process and overcome traumatic events by changing how our brain responds to things associated with bad experiences. Furthermore, her finding that the effects of psilocybin can vary between individual mice leaves open the question of why some people respond differently to the same treatment. One possibility is that the mice that weren’t helped by psilocybin were already “behaviorally rigid” meaning that they were already prone to repetitive, inflexible behaviors. This may correspond to a person who suffers from PTSD, in addition to other neuropsychiatric disorders, like obsessive compulsive disorder, for example. It may be the case, therefore, that patients with multiple or overlapping disorders would not benefit from psilocybin treatment. Nevertheless, Sophie’s work provides invaluable insight into how psilocybin could be used as a therapeutic drug for the treatment of neuropsychiatric disorders, like PTSD.

About the brief writer: Jafar Bhatti

Jafar Bhatti is a PhD Candidate in the lab of Dr. Long Ding / Dr. Josh Gold. He is broadly interested in brain systems involved in sensory decision-making.

Want to dive deeper into how psilocybin affects the brain after trauma?

Check out Sophie’s full research paper here.

Changes in how brain regions “talk” to each other from childhood to adulthood follow and support a fundamental organizational pattern of the brain

or technically,

Functional connectivity development along the sensorimotor-association axis enhances the cortical hierarchy

[See original abstract on Pubmed]

Audrey Luo was the lead author on this study. Audrey is a 7th-year MD/PhD student fascinated by brain development, which happens to be the topic of her graduate work with Ted Satterthwaite. Having recently defended her PhD, Audrey is heading back to the wards to finish her medical degree. She plans to pursue psychiatry and hopes to combine her research with clinical practice to better understand the human brain and treat mental illness.

Authors of the study: Audrey Luo, Valerie Sydnor, Adam Pines, Bart Larsen, Aaron Alexander-Bloch, Matthew Cieslak, Sydney Covitz, Andrew Chen, Nathalia Bianchini Esper, Eric Feczko, Alexandre Franco, Raquel Gur, Ruben Gur, Audrey Houghton, Fengling Hu, Arielle Keller, Gregory Kiar, Kahini Mehta, Giovanni Salum, Tinashe Tapera, Ting Xu, Chenying Zhao, Taylor Salo, Damien Fair, Russel Shinohara, Michael Milham, Ted Satterthwaite

The human brain supports a wide range of functions. Different regions of the outermost layer of the brain, the cortical surface, support two categories of functions—sensorimotor regions are involved in sensation and movement and association regions are involved in functions like self-reflection and emotion.

However, these cortical regions can be distinguished from each other beyond these two broad categories. We can use a framework known as the sensorimotor-association (S-A) axis. Instead of just categorizing each region as either a sensorimotor or association region, the S-A axis ranks the different brain regions from a sensorimotor end to an association end. Think of this as the difference between simply categorizing people as either “Gen Z” or “Millennial” versus ranking them from youngest to oldest: the ranking method is much more useful for meaningfully distinguishing between people!

Previous studies have shown that the S-A axis summarizes how many characteristics, including the brain’s physical structure, blood flow, and cell type composition, differ across the cortical surface. Because of the prevalence of this cortical surface pattern across so many biological brain measures, neuroscientists believe that the S-A axis is essential to the human brain’s ability to carry out its wide variety of functions.

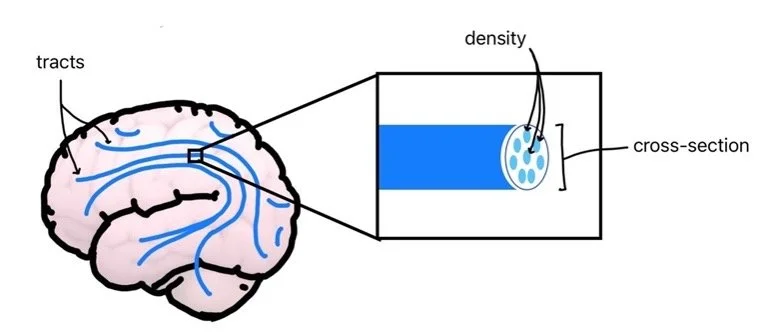

In this paper, NGG student Audrey Luo and her colleagues in the Satterthwaite lab were interested in how different parts of the brain communicate with each other during the transition from childhood to adulthood. Imagine each brain region has a nonstop video call link that any other region can join at any time. When two regions’ activities are synchronized, it’s as if they have connected on video, communicating while their “screens” are in sync—neuroscientists call that functional connectivity (FC). Audrey wanted to know how the change in functional connections between different cortical regions during development related to the S-A axis. To do this, Audrey used data from participants ages 5 to 23 scanned with functional magnetic resonance imaging (fMRI). fMRI is an imaging research tool that can tell us when different areas of the brain are getting oxygen-rich blood, which roughly reflects which parts of the brain are activated.

Because Audrey included 200 cortical regions in her analysis, there could be an astonishing 19,900 possible FC pairs, or 19,900 “video calls”. To summarize across all these connections, Audrey gave each region a single score called FC strength—the average of a region’s connectivity to all the rest of the cortical regions. A high score means the region’s activity tends to stay in sync with many other regions (e.g., long calls with many partners, shared screen, very social); a low score means its activity tends to differ from other regions (e.g., short calls with few partners, distinct screen, less social). With this one-number summary of FC strength, Audrey tracked how each region’s connectivity to the rest of the brain changed from childhood to adulthood to ask the question: how is FC strength development across the cortical surface related to the S-A axis?
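
The counting and averaging described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the analysis code from the paper: the function names `count_region_pairs` and `fc_strength` are hypothetical, and the 3-region connectivity matrix is made up.

```python
def count_region_pairs(n_regions):
    """Number of unique region pairs ("video calls") among n_regions."""
    return n_regions * (n_regions - 1) // 2


def fc_strength(fc_matrix):
    """Average connectivity of each region to all other regions.

    fc_matrix: square list of lists, where fc_matrix[i][j] is the
        functional connectivity between region i and region j.
    The diagonal (a region's connectivity with itself) is skipped.
    """
    n = len(fc_matrix)
    strengths = []
    for i, row in enumerate(fc_matrix):
        others = [value for j, value in enumerate(row) if j != i]
        strengths.append(sum(others) / (n - 1))
    return strengths


# 200 regions give 200 * 199 / 2 unique pairs.
print(count_region_pairs(200))  # 19900

# Made-up connectivity values for three regions.
toy_fc = [
    [1.0, 0.6, 0.2],
    [0.6, 1.0, 0.4],
    [0.2, 0.4, 1.0],
]
print(fc_strength(toy_fc))  # one score per region, roughly 0.4, 0.5, 0.3
```

In this toy example the second region has the highest score, so in the video call analogy it would be the most "social" of the three.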

What Audrey found was that as children grew older, sensorimotor regions showed increased connectivity and association regions showed decreased connectivity. More specifically, Audrey showed that the pattern of change in FC strength of each brain region was directly related to its S-A axis rank: the closer the brain region was to the “sensorimotor end” of the axis, the more likely it was to increase in connectivity (i.e., more social), and the closer the brain region was to the “association end” of the axis, the more likely it was to decrease in connectivity (i.e., less social). In other words, functional connections don’t develop uniformly across the cortical surface. Instead, they follow a specific pattern that matches the fundamental organizational framework of the S-A axis.

To further understand how functional connectivity during development related to the S-A axis, Audrey asked whether the change in FC between a pair of regions depends on their identities as either sensorimotor or association regions. Bringing back the video call analogy, we can think of sensorimotor and association regions as two friend groups. Essentially, Audrey is asking—how does video call frequency between and within the two friend groups change over development? What she found was that as children grew into adults, connectivity between sensorimotor regions increased, connectivity between association regions decreased, and connectivity between association and sensorimotor regions also decreased. This paints a more specific picture of how functional connectivity develops: sensorimotor regions become more social (but just with each other), while association areas become more independent and specialized.

Overall, this work suggests that the S-A axis is not only a framework of how the brain is organized in adulthood, but also how the brain develops from childhood onward. Audrey argues that this specific pattern of changes in how sensorimotor and association areas communicate ultimately helps establish the S-A axis as the dominant cortical framework of the brain, enabling the vast diversity of functions observed in adulthood.

Perhaps the most impressive aspect of the paper is that it reports the same results from not just one dataset, but four large datasets that were collected from different sites and in different ways. Indeed, some of Audrey’s findings are replications of results found in a paper by a graduated NGG student, Adam Pines (https://www.upennglia.com/briefs/bib-personalized-brain-networks), an impressive study conducted in one large dataset. Now, with Audrey’s rigorous work in four large datasets, we can have even more confidence as a scientific community that these findings on the S-A axis are not just dependent on the specific circumstances of a particular sample, but instead capture something real about human biology. This is a concept known as generalizability: the extent to which findings can be applied to the entire population beyond a specific sample. This study is an excellent example of generalizable science and reveals a rigorous framework of functional brain development. Hats off to Audrey and the rest of the team!

About the brief writer: Kevin Sun

Kevin is co-mentored by Drs. Aaron Alexander-Bloch and Ted Satterthwaite, working with functional neuroimaging data to ask questions about development, genetics, and transdiagnostic psychopathology risk. Outside of research, Kevin enjoys film, weightlifting, art museums, and speculative fiction.

If you’d like to read more, see the original paper here!

Not all neurons shake during seizures

or technically,

Ndnf Interneuron Excitability Is Spared in a Mouse Model of Dravet Syndrome

[See original abstract on Pubmed]

Sophie Liebergall is a 7th-year MD-PhD student who is mentored by Ethan Goldberg at the Children's Hospital of Philadelphia. She is interested in how different types of neurons in the brain send signals to other neurons along their axons, and how this process may be impaired in diseases like epilepsy. After completing medical school, she plans to do a residency in Pediatric Neurology and try to bring insights from the lab to the care of children with neurologic diseases.

Authors of the study: Sophie Liebergall, Ethan Goldberg.

Neurons, the cells in our brains, communicate with each other with electricity. Usually, the amount of electricity is carefully regulated, allowing neurons to pass signals to each other in a controlled way. However, sometimes there is a sudden burst of electricity in the brain, causing a seizure. Many people will experience a single seizure during their lifetime, but people with regular seizures are diagnosed with epilepsy. Epilepsy is a broad category of brain disease that affects up to 50 million people worldwide. Some types of epilepsy can be managed with medication, but others are treatment-resistant and very difficult to control.

Dravet syndrome is one example of a disorder that causes treatment-resistant epilepsy. It’s a rare neurodevelopmental disorder that causes severe, frequent seizures starting in the first year of life, plus intellectual disability and developmental delay. Scientists have figured out what causes this disorder: mutations in a single gene called SCN1A. SCN1A is a gene that contains the instructions to make Nav1.1, one of the brain’s sodium channels. Some types of neurons in the brain rely on Nav1.1 to generate and control their electrical signals. Even though we understand the cause, there is still no cure for Dravet syndrome, and there is no current treatment that can fully manage patients’ symptoms. Scientists are still working to better understand the effects of mutations in SCN1A in neurons, with the hopes that new knowledge could eventually lead to new treatment strategies.

There are many different types of neurons in the brain, and scientists have learned that the gene SCN1A is most highly expressed in a group of neurons called inhibitory neurons. These neurons work by quieting their neighbors, helping to prevent runaway activity in the brain. Because SCN1A is especially important for these inhibitory neurons, scientists think that mutations in this gene disrupt the brain’s electrical balance: when inhibitory neurons can’t do their job, their neighbors can become overactive, leading to seizures.

Sophie Liebergall, a current NGG student, wanted to learn more about whether all inhibitory neurons are affected by SCN1A mutations. Specifically, she looked at a group of inhibitory neurons that express a protein called Ndnf. SCN1A mutations, like those that cause Dravet syndrome, impair the ability of some types of inhibitory neurons to generate electrical signals, but we didn’t know anything about what happens to Ndnf inhibitory neurons. The goal of Sophie’s study was to see whether Ndnf inhibitory neurons are disrupted similarly to other inhibitory neurons when there are errors in the SCN1A gene.

To investigate this question, Sophie used a mouse model of Dravet syndrome, where one of the two copies of Scn1a was genetically removed from all neurons. She then measured the electrical properties and activity of Ndnf inhibitory neurons in these mice, and she compared their properties to Ndnf interneurons in healthy mice with both working copies of Scn1a.

Surprisingly, Sophie found that Ndnf inhibitory neurons seemed totally unaffected by the missing copy of Scn1a -- their electrical properties were similar to those of healthy neurons. This was unexpected because the electrical properties of other types of inhibitory neurons are really different when they’re missing a copy of Scn1a. Sophie also found that these neurons do not seem to depend on the protein encoded by Scn1a to generate their electrical signals, which may explain why their activity was normal.

Sophie’s findings are important because they challenge the simple version of the hypothesis that seizures in Dravet syndrome are caused by a blanket loss of inhibitory neuron function. Instead, Sophie’s study shows that the story is more complex: some inhibitory neurons, like Ndnf neurons, remain fully functional even when Scn1a is missing. These results reshape our understanding of the disease mechanisms of Dravet syndrome and open the door to more targeted approaches in treating the disorder. More broadly, Sophie’s work reminds us to pay attention to the full diversity of neurons. The brain is complex, and it is only by appreciating and dissecting this complexity that we will be able to understand how the brain works and how it goes wrong.

Great work Sophie!

About the brief writer: Lyndsay Hastings

Lyndsay Hastings is a PhD candidate in NGG working in Dr. Tim Machado’s lab. She is interested in how the brain generates flexible and complex movement.

Curious about Sophie’s research? Check out the full paper here!

How Brain Injuries Affect Sleep-Related Neurons Differently in Male and Female Mice

or technically,

Mild Traumatic Brain Injury Affects Orexin/Hypocretin Physiology Differently in Male and Female Mice

[See original abstract on Pubmed]

Rebecca Somach was the lead author on this study in the Cohen lab. Rebecca is currently doing her postdoctoral fellowship at Swarthmore College. Her current research uses planarians as a model system to understand how pesticides affect developmental neurotoxicity. She's interested in helping students understand neurobiology and helping them achieve their research goals.

Authors of the study: Rebecca T Somach, Ian D Jean, Anthony M Farrugia, Akiva S Cohen

A traumatic brain injury (TBI) occurs when an external source, such as a strong hit to the head, damages the brain. TBIs can be mild or severe, with mild TBIs (mTBI) being especially common and accounting for a large portion of TBI cases. Both TBI and mTBI can negatively impact brain physiology and lead to problems like sleep issues. Surprisingly, despite a large portion of TBI patients experiencing disordered sleep, there is very little research focusing on how these injuries may affect neural circuits that regulate sleep. Understanding the link between TBI and disrupted sleep is an important step in improving treatments for TBI patients.

Orexin/hypocretin neurons are specialized brain cells found in a brain region called the Lateral Hypothalamus. These neurons play a critical role in sleep and wake regulation. When they are not working properly or if they die off, it can lead to sleep-related issues, such as narcolepsy (a disorder where people fall asleep unexpectedly) or excessive sleepiness during the day. Some research suggests that TBIs can disrupt orexin, which could explain why many TBI patients have sleep troubles.

However, the Cohen lab found that sleep problems after mTBI can happen even when the number of orexin neurons does not decrease due to the injury. This raised an interesting and important question: is it the activity of these neurons after injury, rather than how many there are overall, that is important? To tackle this question, the Cohen lab looked at how active orexin neurons were after mTBI by labeling them for cFOS, a protein that is produced when neurons are active. They found that instead of orexin neurons dying off, the activity of the overall population was actually reduced (less cFOS in the population), suggesting that the number of neurons alone is not the only factor to consider after TBI. These findings were important, as only two other studies have looked at how orexin neuron activity changes after brain injury.

Rebecca Somach, an NGG graduate from the Cohen lab, decided to further expand on this work by incorporating other fascinating neuroscience techniques. These included electrophysiological recordings, which measure electrical activity in the brain, and a mouse that has orexin neurons tagged with a glowing protein, making it easier to study and see these neurons in the brain. Rebecca also wanted to examine whether the effects of brain injury might be different in male and female mice, since sleep can vary between sexes. This is particularly important because there is little research on the effects of TBI, specifically mTBI, on orexin in female animals.

Rebecca specifically examined whether mTBI changes how orexin neurons respond to electrical signals or how often they fire in male and female mice. To do this, she used current clamp recording, a neuroscience technique used to see how neurons react when they are injected with electrical current. In female mice, she found that orexin neurons became more negatively charged after mTBI, a process called hyperpolarization. In contrast, in male mice, orexin neurons had a reduction in action potential threshold after mTBI, making it easier for them to fire. These seemingly small changes in how neurons behave after mTBI can have a big impact on how they communicate with other areas of the brain.

While orexin neurons are found in the Lateral Hypothalamus and talk to neurons adjacent to them, they are also connected to many different parts of the brain. Because of this, Rebecca wanted to examine whether mTBI could affect the connections these neurons receive from other brain regions. Orexin neurons receive both excitatory and inhibitory signals. To focus only on excitatory signals, Rebecca recorded neurons while applying a chemical called bicuculline, which blocks inhibitory signals. This allowed her to study how excitatory signals alone may have changed after brain injury in male and female mice. She looked at two types of excitatory post-synaptic currents - spontaneous (sEPSC) and miniature (mEPSC), which can be thought of as the orexin neurons’ responses to excitatory inputs from other neurons. In short, the stronger the EPSCs, the more likely the neurons are to become active. After injury, she saw that these signals were less frequent and weaker in both male and female mice, meaning that the orexin neurons were receiving less excitatory input.

Next, Rebecca did the reverse experiment and isolated only the inhibitory signals to examine if there were mTBI-induced changes. She did this by recording orexin neurons with AP5 and CNQX, which are chemicals that remove excitatory currents. Rather than looking at sEPSCs and mEPSCs, she then focused on recording spontaneous and miniature inhibitory currents (sIPSC, mIPSC) in male and female mice. After injury, she saw reduced time between sIPSCs, meaning they occurred more often. Interestingly, she only saw stronger sIPSCs and mIPSCs in female mice, which could help explain why sleep disturbances after TBI can vary depending on sex.

Overall, while previous research has shown an effect of mTBIs on orexin neurons, not many studies looked at how brain injury impacts the activity of these neurons. Rebecca addressed this gap by exploring how the activity and connections of orexin neurons are altered after brain injury in both males and females. She shows that mTBI significantly alters how orexin neurons respond to inputs from other neurons and that although these changes appear similar between male and female mice, the underlying mechanisms are not the same. Ultimately, her work provides insight into how brain injury may disrupt sleep-related circuits and informs us why TBI patients may experience disordered sleep.

About the brief writer: Diana Pham

Diana is a Neuroscience Ph.D. student in Dr. Brett Foster’s lab. She is broadly interested in how memories are encoded and consolidated in the brain.

Want to learn more about the effects of brain injury on sleep-related neurons? You can find Rebecca’s full paper here!

How does the brain transform our memories during sleep?

or technically,

Memory reactivation during sleep does not act holistically on object memory

About the author: Liz Siefert

Liz is a 4th-year PhD candidate working with Dr. Anna Schapiro and Dr. Brett Foster. She is interested in how our memories change and shift over time, and the role of arousal states (wake, sleep) in these changes.

[See Original Abstract on Pubmed]

Authors of the study: Elizabeth M. Siefert, Sindhuja Uppuluri, Jianing Mu, Marlie C. Tandoc, James W. Antony, Anna C. Schapiro

Whether you’re a human, dog, or fruit fly, we all sleep. There’s no doubt that sleep is essential, but why we sleep is still hotly debated. Many ideas have centered around the observation that sleep is important for strengthening key memories and putting them into storage. Neuroscientists believe this happens during sleep when the brain replays memories to strengthen them, a process called memory reactivation. Supporting this idea, numerous studies have shown that people tend to do better if they take even a short nap between learning something and being tested on it.

NGG student Elizabeth Siefert was fascinated by the process of memory reactivation during sleep, but wanted to better understand what impact it has on our memories. She noticed that sleep can’t just be improving memory overall, because that’s not how memory works. “As we go about our lives, our memories don’t always continue to improve,” says Siefert. “Often, they get worse, or they change in different ways. So really, across time, memory isn’t just improving, but it’s transforming.” Following on these observations, Siefert designed an experiment to understand whether sleep simply improves memory or rather has the power to transform it by strengthening some aspects of a memory while allowing others to fade.

Studying the sleeping brain

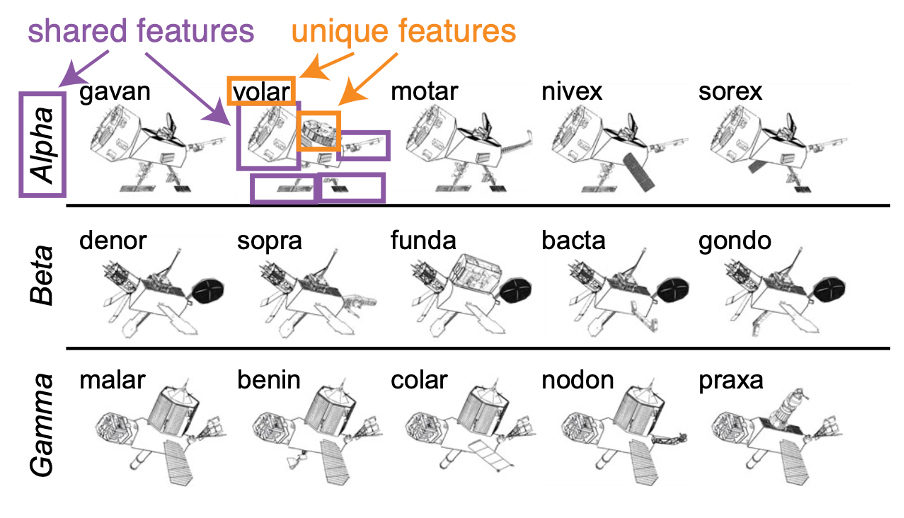

To probe the nature of memory transformations during sleep, Siefert and her team needed a memory test that allowed them to assess different aspects of memory. “Memories have lots of different features, so we wanted to know if memory reactivation has the power to act on different features of our memories in different ways,” says Siefert. She did this by asking participants to learn the identities of several satellites belonging to three groups. The satellites were created by mixing different parts so that the team could control the different features of a memory (Figure 1). Some shared features appeared on multiple satellites within a group, but never on satellites in other groups. Other unique features were specific to just one satellite, allowing the participants to identify it by name. This allowed the team to look at how representations of the individual versus the shared features were transformed in memory during sleep. Siefert asked the participants to learn the satellite names and groups, had them take a nap, and then tested how well they remembered the unique versus shared satellite features.

Just because participants took a nap doesn’t mean they were necessarily going to replay their memories of the satellites. That’s why Siefert stepped in to nudge their sleeping brains to replay the memories she was interested in. She did this using a method called targeted memory reactivation (TMR). During TMR, experimenters measure a participant’s brain activity while they sleep and look for moments when the sleeping brain is most likely to replay a memory. When those moments are identified, the experimenter plays a cue to remind them of a recent memory and encourage the brain to replay that specific memory. The cues are played softly enough that they don’t wake up the participant, and participants don’t know that the cues are being played, so any impacts of TMR are unconscious. “The best method we know in humans for studying memory reactivation in sleep is TMR,” says Siefert. “Our sleeping brain is already prioritizing certain information, and what we’re doing with TMR is biasing the brain to prioritize what we want it to.” In Siefert’s experiment, she played the names of some of the satellites the participant had just learned to encourage reactivation of those memories.

Figure 1. Satellites used in the memory task. Satellites belonged to three groups: alpha, beta, or gamma. The purple and orange boxes highlight the shared and unique features of the satellite volar. The purple boxes show volar’s features that are shared with other satellites in the alpha category, while the orange boxes show the features that are unique to volar.

Clarifying the relationship between sleep and memory

With all the pieces in place, Siefert was ready to ask how memory replay during sleep impacted different features of memory. Before the nap, participants tended to do a better job learning the satellites’ unique features compared to their shared features. In other words, they were better able to identify that a particular feature belonged to an individual satellite than that a particular feature was shared by satellites of a certain group. After a nap with TMR, that divide only widened. Siefert found that TMR increased participants’ memory for unique features while decreasing their memory for shared features. For satellites whose names were not used as cues during sleep, there was no impact on memory. This demonstrates that the effects were likely due to the reactivation encouraged by TMR. “That showed us that memory reactivation during sleep, it’s not just holistically improving our memory, it has the power to act on specific features of our memory in very specific ways,” says Siefert. “It [can] even impact different features of our memory in different ways, such as improving some even at the cost of others.”

Why were certain features strengthened while others got weaker with reactivation? One possibility is that the cues used to trigger replay during sleep were the satellite names. This may have encouraged the brain to prioritize information about the individuals’ identity rather than their group membership. Future studies could use the group names as a cue instead and see if that nudges the brain to prioritize shared over unique features. Another possibility is that people already tended to learn the unique features better before the nap. That prioritization may have carried over into their sleeping brains. “Your learning strategy and goals before sleep might bias what type of information the sleeping brain wants to reactivate,” says Siefert. “It’s possible that it was those learning strategies that led that information to be benefitted more by sleep.” Importantly, no matter what the explanation, it’s not the case that unique features will always be remembered over shared features following a night of sleep. Instead, a complex combination of learning goals and strategies likely shapes exactly how memories are transformed during sleep.

Unbeknownst to the participants, Siefert was also testing how the way she presented the cues during sleep impacted memory. Sometimes she played the same satellite name over and over in a block, and other times she played satellite names intermixed with each other. Importantly, she always played the satellite names the same number of times during sleep, changing only the order in which she played them. While both methods led to improved memory for unique over shared features, repeating the same satellite name many times in a row was more effective in transforming memory than playing the names in a random order. Siefert suggests that this may be evidence that reactivating memories in blocks helped the brain differentiate things in memory more than random reactivations.

The lab is already extending their results to understand more about the relationship between sleep and memory. Specifically, they’re interested in how sleep may help us take new information and incorporate it into older memories. “A study that we’ve run in the lab since [mine] is trying to understand what it looks like to learn new information that is aligned with things that you know from the past and how that new information can become integrated into older memories without totally overwhelming those old memories,” says Siefert. It seems that we’re only scratching the surface of what TMR can teach us about the sleeping brain’s relationship to memory.

What does this mean for everyday life?

After learning about these results, you might wonder whether students should give up on wakeful studying and just play their textbooks while they sleep. Unfortunately, it’s still not that easy. “Because [memory reactivation] doesn’t just improve memory and has this transformation component, if you took this device home and used it to play a textbook it’s not clear to me whether it would improve or hurt your memory of that information,” says Siefert. “You might need specific learning goals, you would need to think carefully about when you are delivering the cue, and you need to consider lots of other things.” It may be possible to design a system that could help a student study in their sleep, but we still need to learn a lot more about how TMR works before that will be possible.

Despite its complicated nature, some scientists are optimistic that they may be able to bring TMR to your bedroom. Rather than recording brain activity to target specific moments for reactivation, they aim to use audio recordings of movement during sleep to target the longer periods of sleep when they think replay naturally occurs. “For me in the lab it’s really important to target specific moments so I really know what my cues are doing, but in the real world that might be less important,” says Siefert. TMR is already being used in some clinical settings to help stroke patients relearn how to move their bodies, and Siefert suggested that it could one day be helpful for things like language learning if you’ve already learned some of the basics. For those who may be worried about TMR being used for brainwashing or unconscious influence, Siefert says we aren’t capable of that now and may never be. “Your brain is already doing things and we’re just biasing it to little quick moments,” says Siefert. “We aren’t at the point where we can totally change the way that you’re thinking about something.”

Even in the absence of a TMR system on your nightstand, one takeaway is clear: sleep is important. “We don’t have a good understanding of why we’re remembering and forgetting certain things but knowing that the brain is selecting information means that that selection is probably important, and that selection is clearly happening during sleep,” says Siefert. “That means you should be getting good sleep, because you want to allow your brain time to process the information.” So next time you’re faced with the decision to stay up a little later studying for a test or prepping for a meeting, remember that choosing to sleep may be even more important than putting in that extra half hour of work.

About the brief writer: Catrina Hacker

Catrina Hacker is a PhD candidate working in Dr. Nicole Rust’s Lab. She is broadly interested in the neural correlates of cognitive processes and is currently studying how we remember what we see.

Learn more about the team’s memory reactivation study in the original paper.

A new approach to imaging the brain during early-stage neurodegeneration

or technically,

Positron Emission Tomography with [18F]ROStrace Reveals Progressive Elevations in Oxidative Stress in a Mouse Model of Alpha-Synucleinopathy

[See original abstract on Pubmed]

Evan Gallagher is a recent graduate of Penn’s neuroscience graduate program, and the lead author on this study. He is broadly interested in using neuroimaging approaches like magnetic resonance imaging (MRI) and positron emission tomography (PET) to study complex biological processes in living animals and people. Ultimately, he hopes that his work allows us to better understand—and eventually treat—major neurological disorders like Alzheimer’s and Parkinson’s.

Authors of the study: Evan Gallagher, Catherine Hou, Yi Zhu, Chia-Ju Hsieh, Hsiaoju Lee, Shihong Li, Kuiying Xu, Patrick Henderson, Rea Chroneos, Malkah Sheldon, Shaipreeah Riley, Kelvin C. Luk, Robert H. Mach and Meagan J. McManus

Neurodegenerative diseases, like Alzheimer’s and Parkinson’s, impact 15% of the world’s population [1]. This percentage has climbed considerably over the last 30 years and is expected to continue rising as the global population ages [2]. Still, the process of diagnosing (and therefore treating) neurodegenerative diseases remains quite challenging. The difficulty arises because brain changes that play a central role in the disease process begin years or even decades before people experience symptoms [3]. In other words, by the time patients notice physical changes (e.g., memory loss, difficulties with movement, etc.), their brain is already dramatically and permanently affected.

What if we had some way to detect and visualize very early neurodegeneration-related brain changes? Doctors could then identify people at risk for developing neurodegenerative diseases and intervene with treatments before things escalate beyond repair. This is the vision that inspired recent work by Neuroscience Graduate Group (NGG) alum Dr. Evan Gallagher in collaboration with the labs of Dr. Robert Mach and Dr. Meagan McManus at the University of Pennsylvania.

In order to find early signs of trouble, the team needed something that serves as a “red flag” for neurodegeneration as well as a way to find and measure it in the living brain. They landed on reactive oxygen species (ROS), which are molecules that exist naturally in the body, but that can lead to problems if they build up over time. “Excessive production of reactive oxygen species is an important, central process in many neurodegenerative diseases,” explains Dr. Gallagher. “It’s also a very early process. This means that if we’re able to detect and quantify reactive oxygen species in the brain, we may be able to identify that there’s a problem much earlier than we are currently doing.” To find and label reactive oxygen species in the brain, chemists in Dr. Bob Mach’s lab engineered a chemical called ROStrace [4]. ROStrace is a positron emission tomography (PET) imaging radiotracer. When injected into the body, ROStrace travels through the bloodstream and into the brain where it targets and tags reactive oxygen species, giving off a radioactive signal that can be detected. This means that researchers can perform a ROStrace PET imaging scan and get out an image of the brain that looks and works a lot like a heatmap with the color and pattern of the image relaying information about the number of reactive oxygen species present across brain regions. With these PET imaging heatmaps, researchers are able to find “hot spots” of highly-concentrated reactive oxygen species, which could be indicators of places in the brain where the groundwork for neurodegeneration is being laid.

To validate that their ROStrace technology works and prove that it can be used to detect early signs of neurodegeneration, the Mach and McManus labs performed a series of well-controlled experiments in a mouse model. In particular, they used a mouse model with a mutated form of the human alpha synuclein protein. The mutation causes alpha synuclein to accumulate as mice age, the same process that happens in a number of human neurodegenerative diseases. Dr. Gallagher performed ROStrace PET imaging on these animals when they were either 6 or 12 months old (middle and old age for this type of mouse) and compared the results to healthy mice in the same age groups. He found that the mutant alpha synuclein mice had higher levels of reactive oxygen species than the healthy animals at both timepoints. However, it wasn’t just the overall counts of reactive oxygen species that differed. Looking at the ROStrace brain images, Dr. Gallagher could identify a clear spatial pattern with which reactive oxygen species spread across the brain over time in the mutant alpha synuclein animals. It was the same pattern he found when he took brain tissue from mutant animals and stained it with special chemicals to label reactive oxygen species. In other words, it seemed like his PET scan brain images matched the reality of reactive oxygen spread as seen in mouse brains under the microscope. ROStrace worked! “It’s really exciting,” says Dr. Gallagher. “The fact that our PET images matched up with what we found in tissue means we actually have a way of detecting reactive oxygen species in the brains of living animals.”

Taking things one step further, Dr. Gallagher wanted to verify that the ROStrace signal was highlighting something biologically and clinically useful. That is to say, he wanted to confirm that the reactive oxygen species seen on ROStrace brain images were actually related to alpha synuclein disease pathology. Using brain tissue from the same mutant mouse model, this time he stained it with chemicals to mark reactive oxygen species and alpha synuclein proteins. Dr. Gallagher found that the two chemicals labeled many of the same brain cells and brain regions, suggesting that reactive oxygen species were, in fact, associated with alpha synuclein pathology. Remarkably, the co-occurrence of reactive oxygen species and alpha synuclein in the brain was detectable months before mice showed severe behavioral symptoms. This implies that the ROStrace signal could one day be used to diagnose, or even to preemptively screen for, alpha synuclein-related brain diseases, like Parkinson’s or Alzheimer’s, long before patients realize something is wrong.

ROStrace could also have applications beyond serving as a marker for alpha synuclein accumulation and neurodegeneration. “As far as we can tell, just about everything ‘bad’ happening in the body is associated with reactive oxygen species,” offers Dr. Gallagher. That means that the methods used in this study should be generalizable across many other disorders. This generalizability, however, does come with a downside. “One bad thing that could be happening in your body is alpha synuclein aggregation, but that’s certainly not going to be the only bad thing happening in your body at any given time,” Dr. Gallagher continues. “For instance, a stressful event or a bad night of sleep can cause increased levels of reactive oxygen species. ROStrace can pick up on that and it makes interpreting a single scan really tricky.” In the current study, Dr. Gallagher navigated this limitation by using scans from many mice to find differences between sick and healthy groups. However, being able to get interpretable information from one single scan is critically important when we think about using technologies, like ROStrace, in the clinic. So, while this study represents an exciting first step, for now research with ROStrace has been limited to lab animals and there are still hurdles to clear before human subjects are part of the picture.

About the brief writer: Kara McGaughey

Kara is a PhD candidate in Josh Gold’s lab studying how we make decisions in the face of uncertainty and instability. Combining electrophysiology and computational modeling, she’s investigating the neural mechanisms that may underlie this adaptive behavior.

Citations:

[1] Van Schependom, J., & D’haeseleer, M. (2023). Advances in Neurodegenerative Diseases. Journal of Clinical Medicine, 12(5), 1709. https://doi.org/10.3390/jcm12051709

[2] Brown, R. C., Lockwood, A. H., & Sonawane, B. R. (2005). Neurodegenerative Diseases: An Overview of Environmental Risk Factors. Environmental Health Perspectives, 113(9), 1250–1256. https://doi.org/10.1289/ehp.7567

[3] Gallagher, E., Hou, C., Zhu, Y., Hsieh, C.-J., Lee, H., Li, S., Xu, K., Henderson, P., Chroneos, R., Sheldon, M., Riley, S., Luk, K. C., Mach, R. H., & McManus, M. J. (2024). Positron Emission Tomography with [18F]ROStrace Reveals Progressive Elevations in Oxidative Stress in a Mouse Model of Alpha-Synucleinopathy. International Journal of Molecular Sciences, 25(9), 4943. https://doi.org/10.3390/ijms25094943

[4] Hou, C., Hsieh, C.-J., Li, S., Lee, H., Graham, T. J., Xu, K., Weng, C.-C., Doot, R. K., Chu, W., Chakraborty, S. K., Dugan, L. L., Mintun, M. A., & Mach, R. H. (2018). Development of a Positron Emission Tomography Radiotracer for Imaging Elevated Levels of Superoxide in Neuroinflammation. ACS Chemical Neuroscience, 9(3), 578–586. https://doi.org/10.1021/acschemneuro.7b00385

Interested in reading more about ROStrace? You can check out Evan’s paper here!

How genes influence social behavior in animals

or technically,

Conserved autism-associated genes tune social feeding behavior in C. elegans

[See original abstract on Pubmed]

Mara Cowen was the lead author on this study. Mara was co-mentored by Dr. Mike Hart and Dr. David Raizen and researched the effect of mutations in the autism-related gene, Neurexin, on aggregation, stress response, sleep, and neuronal morphology in C. elegans as part of the Autism Spectrum Program of Excellence (ASPE). When not in the lab, Mara can be found traveling, baking, binge-watching Netflix, and going to breweries with her rescue Australian Shepherd, Crispr!

Authors of the study: Mara H. Cowen, Dustin Haskell, Kristi Zoga, Kirthi C. Reddy, Sreekanth H. Chalasani & Michael P. Hart

Most people, including myself, love having lunch with their friends. This kind of behavior is known as social feeding and is common across many animals, including most species of birds, fish, mammals, and even insects. Social feeding, like other behaviors that animals exhibit, is controlled by the nervous system, which is made up of nerve cells called neurons. Despite the fact that different animal species have different nervous systems, social feeding occurs almost universally across the animal kingdom. This raises the question: what in the nervous system makes animals prefer social feeding to eating alone? Mara, a former NGG graduate student, and her lab thought that perhaps this was due to changes in an individual’s genes - the blueprints for how the body should grow, develop, and function.

To explore this question of how genes might influence social feeding, Mara studied the behavior of an animal called C. elegans, which is a worm-like animal about the size of a grain of sand. Despite their small size and relatively simple nervous system, these worms can perform many different social behaviors, including social feeding. Social worms tend to clump up together, or aggregate, while eating. In addition to exhibiting the behavior that Mara is interested in, C. elegans are commonly used in labs because experimenters have very precise control over the genes that the worms express. Worms that express a particular set of genes are referred to as a strain. This makes C. elegans the perfect animal to study how genes influence social feeding behavior.

Mara began her study using two worm strains that show opposite social feeding behaviors - one strain that shows consistent social feeding, the social control strain (Figure 1A), and one strain that prefers to eat alone, the solitary control strain (Figure 1D). For both strains, she counted the number of worms that aggregated and the number of worms that did not. She found that 78% of the social control worms ate together, whereas only 4% of the solitary control worms ate together.

Figure 1. The effect of different genes on social feeding behavior in C. elegans.

With these two worm strains as reference points, Mara’s main goal was to understand how different genes affected social feeding behavior. However, most animals, including C. elegans, have tens of thousands of genes - how was Mara to know which genes would be involved in social feeding behavior? This is where she and her lab used insight from past studies of human social conditions, such as autism. Recent work studying autism found that the neurexin gene and the neuroligin gene were highly associated with autism. Traditionally, these two genes were known to only be involved in allowing neurons to connect with one another. More recent work exploring the function of these genes suggests that they also play a role in controlling social behavior. Therefore, Mara asked whether the neurexin and neuroligin genes could also be playing a role in social feeding in C. elegans and, if so, how. Indeed, when Mara disrupted the neuroligin or the neurexin gene in the social control strain, the worms displayed significantly less social feeding behavior (Figure 1B). Interestingly, when both the neuroligin gene and the neurexin gene were disrupted, the worms showed even less social feeding behavior than when just one of them was disrupted (Figure 1C).

Next, Mara and her group wanted to take a closer look at where exactly the neurexin and neuroligin genes were acting to support social feeding behavior. Based on previous work, they knew that a few select neurons are particularly important in social feeding. Interestingly, Mara found that these neurons express the neurexin and neuroligin genes and that expressing neurexin in just two of these neurons restored social feeding behavior. Further, Mara wanted to know how neuronal communication was affected in worms that lacked these genes. She found that in social worms without neurexin there were fewer points of connection, known as synaptic puncta. Additionally, these neurons release a chemical called glutamate, which is known to be very important for neurons to signal to one another. Mara and her group found that more glutamate was released from one particular social feeding neuron in the social control group and less glutamate was released from that same neuron in the solitary control group. Mara’s work suggests that increased neural communication, be that number of synaptic puncta or amount of glutamate, was associated with increased social feeding behavior. Therefore, this work provides insight into how genes that regulate the connection between neurons impact social behavior.

Taken together, Mara’s results point to multiple underlying factors that have a direct impact on social feeding behavior. In particular, she identified neurexin, neuroligin, and glutamate as key biological components that are important for this behavior. And importantly, some of these same biological factors have been implicated in human neurodevelopmental conditions, such as autism. Future experiments will build off Mara’s work to get a clearer picture of how social behavior emerges from these biological factors and even perhaps lead the way to the development of therapeutics to help improve people's lives.

About the brief writer: Jafar Bhatti

Jafar Bhatti is a PhD candidate in the labs of Dr. Long Ding and Dr. Josh Gold. He is broadly interested in brain systems involved in sensory decision-making.

Interested in learning more? Check out the full paper here!

Brain cells communicate to help us find food

or technically,

Distinct neurexin isoforms cooperate to initiate and maintain foraging activity

[See original abstract on Pubmed]

Brandon Bastien was the lead author on this study. Brandon graduated from Swarthmore College with a degree in neuroscience with high honors. He then obtained his PhD in Neuroscience from the University of Pennsylvania in the Hart Lab and has continued his work as a postdoctoral researcher. Since joining the Hart lab, his focus has been on defining the circuitry underlying how C. elegans respond to food deprivation stress and how certain synaptic proteins, some of which have orthologs in humans (like neurexins and casprs), and neuromodulators may distinctly act within this behavior-generating circuit. Outside of lab, he enjoys TV shows, football, Eurovision, and traveling.

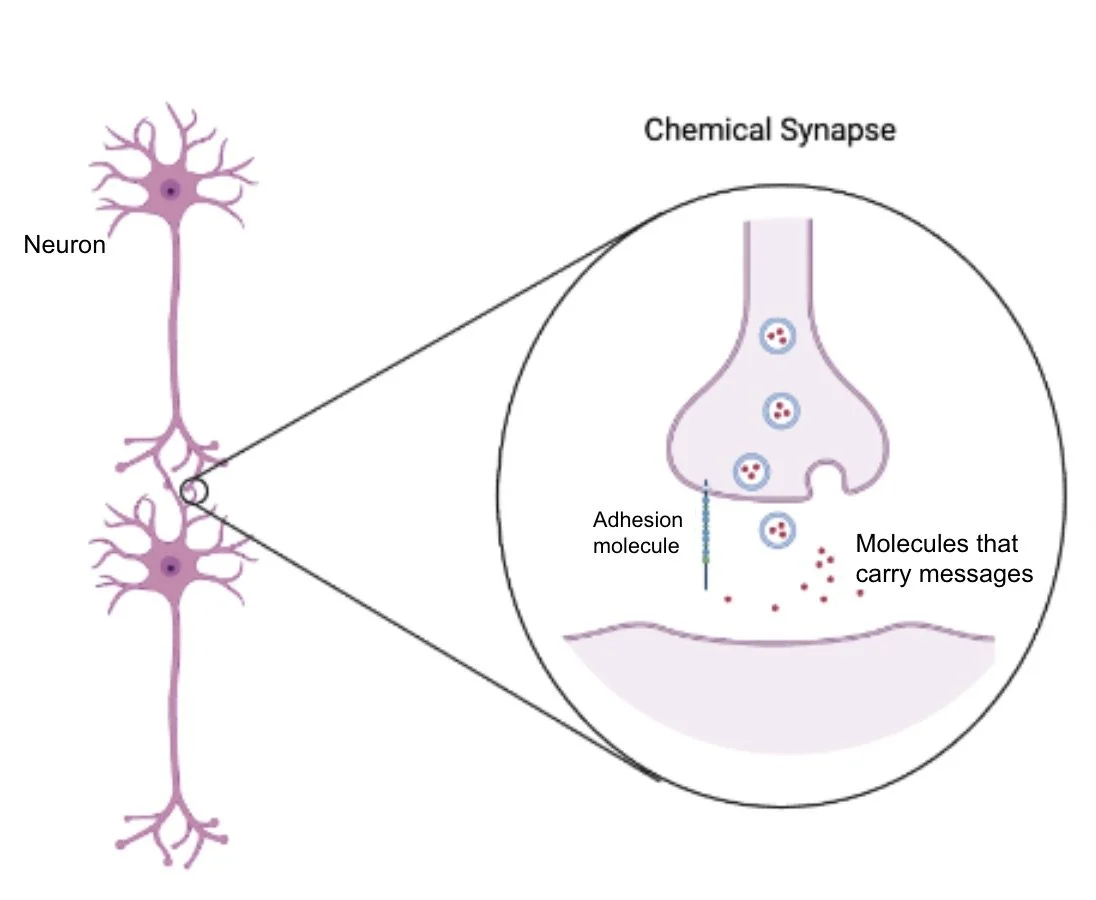

Figure 1: Neurons may communicate through chemical synapses. Adhesion molecules and chemical messenger molecules dictate synaptic communication.

Authors of the study: Brandon L. Bastien, Mara H. Cowen, and Michael P. Hart

The World Jigsaw Puzzle Championship is an annual event where individuals, pairs, and teams compete to solve puzzles the fastest. In the final team round of the Puzzle Championship, groups race each other to solve two 1000-piece puzzles.

Neuroscientists around the world are also puzzlers, of a slightly different variety. The puzzles they aim to piece together are the human brain and the nervous systems of other animals. The human brain contains billions of pieces, each fitting together to reveal an image of who we are. In the brain, each piece is a cell, the basic unit. Just as border pieces and ribbon pieces are different types of puzzle pieces, the brain has different types of cells as well. One type of brain cell is the neuron. Neurons communicate with each other by forming connections across small gaps called synapses. The main type of synapse is the chemical synapse. At a chemical synapse, chemical molecules are transferred from one neuron to another, passing along messages: one neuron releases the chemicals, which travel across the small gap and bind to the receiving neuron to transmit information. Chemical synapses may be modified by structural properties, including how close together the neurons are at the synapse, how large the synapse is, and more. Synaptic structure is held together by adhesion molecules that form bridge-like structures, which facilitate and modify communication at the synapse (Figure 1). These synaptic structures may modify how the chemical messengers communicate with the receiving neuron. Strings of specific neurons that fit together form neural circuits, which regulate behaviors such as walking, eating, or sleeping. When neuroscientists come to understand how our neuron pieces fit together through synapses to regulate behavior, even for a small part of the brain, it is also a win, with the prize of improving human lives.

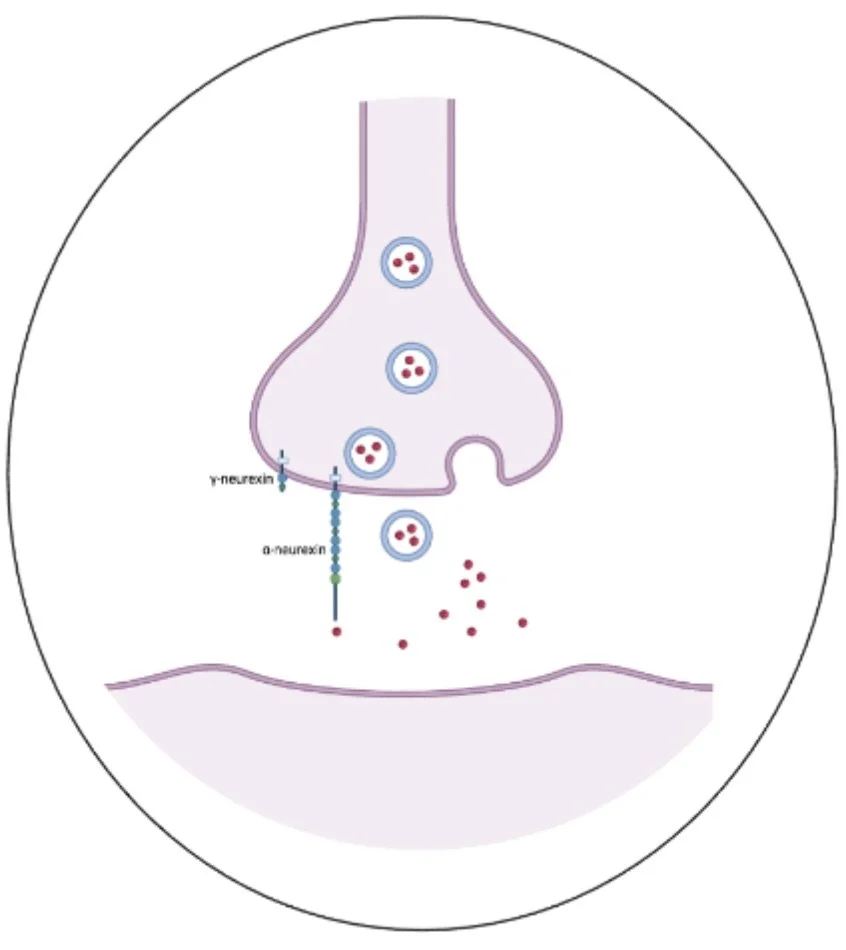

Figure 2: The γ and α versions (isoforms) of neurexin in a synaptic structure

Neurexin, a synaptic adhesion protein, forms part of the bridge between neurons to enable communication. There are different versions of neurexin. Alterations to neurexins affect many behaviors and are associated with neurodiversity, including autism spectrum disorder and schizophrenia. It is therefore important to understand how neurons communicate through synapses to form circuits that regulate behaviors.

Brandon Bastien, an NGG graduate, focuses on understanding how brain pieces fit together through the synapse to regulate behavior, specifically foraging behavior. Foraging behavior, the search for food, is an essential behavior that is conserved in all animals. Since the human brain has billions of pieces, Brandon uses a smaller animal, the small worm C. elegans, which has just 302 puzzle pieces (compared to the human brain, think of this as a puzzle for a child). C. elegans also share with humans conserved genetic (hereditary) information, synaptic structure, synaptic molecules, and foraging behaviors. In the worm, the piece known to drive foraging behavior is a pair of neurons named RIC (one on the left and one on the right). It was known that the RIC neurons use the chemical synaptic messenger octopamine for foraging behavior. It was unknown whether the synaptic cell adhesion structure neurexin is important for foraging behavior or for the connections made to or by RIC neurons.

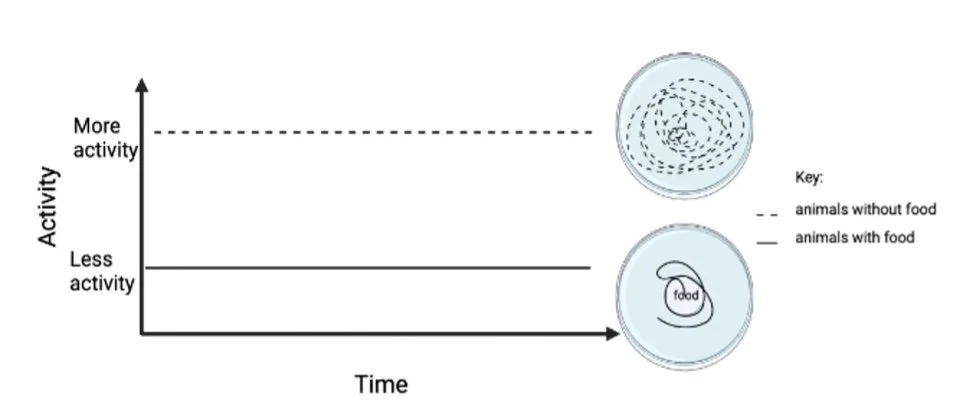

Brandon asked how the synaptic protein neurexin affects the foraging behavior of C. elegans. Two versions (isoforms) of neurexin in the human and the worm are called the γ version and the α version (Figure 2). Both versions are located in the synapse and have shared parts, but they are different sizes (one longer and one much shorter). To test whether neurexin modifies foraging behavior, he used genetic manipulations to delete the hereditary information for the different versions of neurexin. Foraging behavior, the search for food, is quantified by the activity level of the animal. The worms were placed in environments without food and were videotaped through a microscope over the course of eight hours to evaluate their activity. Higher activity indicates more food search and lower activity indicates less food search. Similarly, humans move around to find food when they are hungry. The movement of worms off food was compared to that of worms on food. Animals without food had higher movement activity as they looked for food, and animals with food moved less since they did not need to find food (Figure 3).

Figure 3: Animals that are food deprived have more activity than animals with food.

Brandon identified the roles of the different neurexin versions in foraging behavior by asking how food searching changed when he deleted them. When the short γ version of neurexin was deleted, the effect in the absence of food depended on time. Within the first hour, food-deprived animals that were missing the short γ version of neurexin moved about as much as animals that were fed. However, from hours 2-8, food-deprived animals that were missing the short γ version of neurexin increased their movement significantly compared to fed animals. This indicates that the γ version of neurexin is important for starting the food search behavior, but is less important for maintaining food search in the later hours. When the α version of neurexin was deleted, worms without food moved more than worms with food in the first hour in an attempt to find food, but then decreased their movement in the later hours of the eight-hour period. This implies that the α version of neurexin is important for maintaining food search behavior, but is less important for starting it. This is the first time that the different versions of neurexin were both found to impact foraging behavior. The different versions of neurexin have different functions in food search, with the γ version being important for starting food search and the α version being important for maintaining continued food search (Figure 4). In this way, the different forms of neurexin cooperate to start and continue this foraging behavior.

Figure 4: The γ version of neurexin is important for starting the food search behavior, while the α version of neurexin is important for maintaining food search behavior.

The next question Brandon asked was how the different forms of the synaptic structure neurexin, which affect different phases of foraging behavior, interact with the chemical synaptic signaling molecule octopamine. To test this question, Brandon used animals that were missing octopamine. In the absence of food, worms that were missing octopamine had less activity in the first two hours and then had increased activity in the remaining hours when compared to animals on food. This indicates that the synaptic molecule octopamine is also important for starting food search behaviors, but is not required for maintaining them. Therefore, the γ version of neurexin and octopamine signaling are both essential for starting foraging behavior. Additionally, neurexin in neurons is needed for octopamine function.

Brandon has shown that RIC neurons have synapses in which the synaptic structure neurexin and the synaptic molecule octopamine function to regulate foraging behavior. In addition, the paper identified the different and collaborative roles that the different forms of neurexin have in foraging behavior, with the γ version starting foraging behavior and the α version maintaining it in the absence of food. Lastly, Brandon showed that the synaptic structure neurexin is required for the synaptic molecule octopamine to function, showing how teamwork in synapses regulates behavior. This work provides insights into how neurons communicate through synapses to alter circuits that regulate behavior. Neuroscientists have more work to do in understanding how the different puzzle pieces of our brain fit together.

About the brief writer: Sophia M. Villiere

Sophia is a PhD Candidate in Michael P. Hart’s lab in the Neuroscience Graduate Group at the University of Pennsylvania, studying how autism-associated gene mutations affect neuron morphology and behavior.

Interested in learning more about foraging behavior? Click on Brandon’s full paper here

The inner workings of a rare childhood disease

or technically,

Altered lipid homeostasis is associated with cerebellar neurodegeneration in SNX14 deficiency

[See original abstract on Pubmed]

Vanessa Sanchez was the lead author on this study. As a scientist, Vanessa is passionate about understanding the cell biology of neurons, especially in the context of pediatric neurodevelopmental and neurodegenerative disorders. Outside of lab, you can find Vanessa tending to her garden, trying new recipes, rock climbing, or hanging out with her cat, Jiji!

Authors of the study: Yijing Zhou, Vanessa B Sanchez, Peining Xu, Thomas Roule, Marco Flores-Mendez, Brianna Ciesielski, Donna Yoo, Hiab Teshome, Teresa Jimenez, Shibo Liu, Mike Henne, Tim O'Brien, Ye He, Clementina Mesaros, Naiara Akizu

Neurons are special cells in our bodies that communicate with one another to help us do everyday things like eat, think and walk. Amazingly, for most healthy people, the neurons that we are born with will last our lifetimes and support us as we navigate the world. However, in some rare and unfortunate diseases, neurons die prematurely. These kinds of diseases are called neurodegenerative diseases. There are many different types of neurodegenerative disease, each targeting different groups of neurons and resulting in different symptoms. In 2014, a new and extremely rare neurodegenerative disease was discovered called SCAR20. SCAR20 was found to negatively affect newborn children by causing intellectual disability and impairing motor functions, like the ability to walk. Researchers were quickly able to identify the culprit of the disease: the total lack of a protein called SNX14. Since little is known about SNX14 and how its absence causes SCAR20, Vanessa Sanchez, a current NGG student, and her collaborators designed a study to learn more about the nature of this disease, with the hope that one day there might be a cure or treatment.

Figure 1. Key takeaways from Vanessa and colleagues’ experiments investigating the underlying causes of the SCAR20 disease.

To begin their investigation, Vanessa and her collaborators used genetic tools to remove the SNX14 protein from mice. Genetically modified mice are immensely useful in neuroscience research as they allow scientists to study the underlying causes of disease in detail. In this case, since the researchers removed a protein, the genetically modified mice are referred to as a knockout mouse model. After they generated their new knockout mice, Vanessa and her colleagues tested these mice to make sure that they had all of the symptoms that the children experienced. This was an important step in their study because they wanted to be sure that any discoveries that they make using the knockout mouse model are directly relevant for human patients. Vanessa and her colleagues compared the knockout mice to normal healthy mice and found a few convincing results (Figure 1, Healthy mouse vs. Knockout mouse). First, they found that knockout mice had a complete lack of SNX14 in their brains - the direct cause of SCAR20 in humans. Next, they found that knockout mice were smaller in size and had a structurally abnormal face - two known symptoms of SCAR20 in humans. Finally, they found that the knockout mice had worse social memory and motor ability compared to healthy mice - again, a clear-cut sign of SCAR20 in humans. Given these results, Vanessa and her colleagues were convinced that they had developed a good mouse model of the SCAR20 disease and were now able to investigate how the disease develops.

In order to gain insight into the underlying causes of the disease, Vanessa and her colleagues needed to narrow down their focus to a single brain area. In human patients, SCAR20 seems to preferentially kill neurons in a brain area known as the cerebellum. This brain area is typically thought to be involved in motor control and coordination, which might explain why SCAR20 patients have severe motor disability. Vanessa and her colleagues discovered that, just as in human SCAR20, the knockout mouse model also showed a preferential negative effect on the cerebellum. They found that both the number of neurons and the overall size of the cerebellum were reduced in the knockout mice compared to healthy mice, once again validating the model for the study of SCAR20 and identifying a key brain area to narrow in on.